Towards Complex Reasoning: the Polaris of Large Language Models

University of Edinburgh | yao.fu@ed.ac.uk

Started writing on Apr 30 2023

Released on May 01 2023

Last updated on May 09 2023

Other versions: [pdf] [Arxiv] [中文] [bib]

Recently, there are many works on smaller models that achieve inspiring dialog abilities, which makes people imagine if smaller models can have comparable performance to large models like GPT-3.5. Generally, language models have multi-dimensional abilities, which makes them hard to compare. Finding the correct metric is crucial for developing strong language models. At the current stage, the community is eager to know what are the key differentiators that mark the potential of strong language models.

In GPT-4 release blog, the authors write: “In a casual conversation, the distinction between GPT-3.5 and GPT-4 can be subtle. The difference comes out when the complexity of the task reaches a sufficient threshold”. This means that complex tasks are likely to be the key differentiators for large v.s. small language models.

More importantly, complex reasoning opens up opportunities for building a large spectrum of applications upon language models, effectively making language models the next-generation computation platform/ operating system. This has the potential to substantially change the way humans interact with computers and reshape the whole computational ecosystem.

In this post, we take a close look at methods toward models of strong complex reasoning capabilities.

Table of Content

1 - Motivation: LLMs as future-generation computation platform 2 - Recipe for improving large language models’ reasoning 2.1 - Pretraining and continue training 2.2 - Supervised finetuning 2.3 - Reinforcement learning 2.4 - The intriguing alignment between reasoning and coding3 - Prompt engineering for complex tasks3.1 - Basic chain-of-thought prompting 3.2 - Advanced techniques and analytics 4 - Evaluating language models’ reasoning abilities4.1 - Evaluation basics4.2 - Introducing Chain-of-thought hub5 - Conclusion Appendix: More resources in large language model reasoning

1 - Motivation: LLMs as future-generation computation platform

We study complex reasoning for two reasons:

- As mentioned above, complex reasoning is the key differentiator that marks the differences between small and large models, as is discussed by GPT-4 release post.

- Complex reasoning is a core ability that makes it possible for the model to become the next-generation operating system.

The vision to make language models the next-generation operating system is particularly interesting because it opens countless possibilities for building new applications and creating a language model based computational ecosystem (probably even larger opportunities than super apps like ChatGPT). The ability of complex reasoning serves as the foundation because if we want the model to become a new OS, it needs to be able to complete complex instructions through interactions with tools, users, and all elements of the outside environment.

This post studies how to train models of strong complex reasoning, how to do prompt engineering to fully release the model’s reasoning ability, and how to evaluate the models’ reasoning performance. The content of this post is divided as:

- In section 2, we discuss existing recipes for building language models with strong abilities for complex reasoning. The recipe for complex reasoning is similar to the recipe for generic LLM development, consisting of three stages: continue training, instruction finetuing, and reinforcement learning. We further discuss the intriguing alignment between coding and reasoning.

- In section 3, we discuss prompt engineering techniques for complex reasoning. When language models become new-generation operating system kernels, prompt engineering/ in-context learning will become new-generation shell-scripting.

- In section 4, we discuss how to evaluate the reasoning abilities of large language models. We introduce chain-of-thought hub, a suite of 100+ reasoning tasks that clearly marks the differences of large v.s. small models. We highlight the promising performance of LLaMA 65B, which we view has a very strong potential as a base model for reproducing ChatGPT-3.5.

2 - Recipe for improving large language models’ reasoning

The recipe for reasoning closely follows the recipe for building generic large language models and chatbots. There are three stages in total:

- Pretraining / continue training: where one trains a large model on a large dataset, usually scientific literature or code data.

- Supervised finetuning: where one finetunes the model to follow instructions of complex tasks

- Reinforcement learning: where one uses signals like whether the tasks is fully/ partially finished as the reward.

We further recall the hypothesis that training on code also improves the models’ reasoning ability. So during our literature analysis, we simultaneously consider reasoning and coding. We will see that the two are amazingly correlated with each other in terms of learning methods.

2.1 - Pretraining and continue training

We highlight the following works:

- Lewkowycz et. al. 2022. Minerva: Solving Quantitative Reasoning Problems with Language Models

- Continue training PaLM 540B on 38.5B tokens from Arxiv paper.

- Performance on MATH, a hard dataset requiring answering questions using Latex format, is 33.6 (v.s. GPT-4 42.5)

- Taylor et. al. 2022. Galactica: A Large Language Model for Science

- Pretrain a 120B language model on 106B tokens consisting of papers, code, reference material, knowledge bases, and others.

- Performance on MATH is 20.4 (v.s. Minerva 33.6 and GPT-4 42.5)

- Chen et. al. 2021. Codex: Evaluating Large Language Models Trained on Code

- Continue training the 12B GPT-3 checkpoints on 159GB code data leads to clearly improved coding performance measured on the HumanEval dataset.

- Li et. al. 2023. StarCoder: A State-of-the-Art LLM for Code

- Pretrain an 16B model on 1T the Stack data and achieves very inspiring results on HumanEval and MBPP. Also exhibits strong reasoning.

All works find that training on a large corpus of scientific literature/ code significantly improves the base models’ reasoning/ coding abilities.

2.2 - Supervised finetuning

We highlight:

- Chung et. al. 2022. Scaling Instruction-Finetuned Language Models

- Using a diverse set of instructions significantly improves the model’s ability of zero-shot generalization

- Mixing chain-of-thought data within the instruction collections (further discussed in the flan collection) clearly improves models’ chain-of-thought abilities

- Caveat: the flan collection dataset, although elicits base models’ abilities from multiple dimensions, may not directly translate to better chat performance because these instructions are not from real chatbot user interactions.

- Fu et. al. 2023. Specializing Smaller Language Models towards Multi-Step Reasoning

- Distilling chain-of-thought reasoning abilities to models of smaller scales (less or equal than 10B). Generally, models of 10B scales are ideal for deployment (larger models are expensive, smaller models are incapable).

- A lot of engineering details are discussed in this paper, such as data engineering, ability balancing, and differences between small and large models

- Li et. al. 2022. Competition-Level Code Generation with AlphaCode

- Pretrain a 41B model on 715GB of GitHub code then finetune it on the CodeContest dataset of 13k problems

- During testing, use sampling and filtering out the solution base on if passes the examples tests. This practice is in a sense, similar to the self-consistency approach in reasoning problems.

The current understanding of instruction tuning is:

- It is relatively easy to tune a base model into a chatbot simply using dialog formatted data (see great examples like Alpaca and MOSS). Yet the ability to chit-chat does not translate to abilities to perform complex tasks. From this perspective, models are like humans: talk is cheap, show me the code.

- The instruction tuning problem, in practice, is a data mixing problem: how to best mix instruction data from different sources such that it uniformly improves model performance from all perspectives (rather than increasing one dimension but decreasing the other, as discussed in CoT specialization and the flan collection).

- A quick safe starting point for data mixing is: use 10-20 shots data points of non-chain-of-thought data (to balance abilities of different dimensions) but use as many chain-of-thought data as possible (to maximize reasoning abilities).

2.3 - Reinforcement learning

We highlight:

- Uesato. et. al. 2022. Solving math word problems with process- and outcome-based feedback

- Building reward models based on the intermediate reasoning and the final reasoning results.

- Le et. al. 2022. CodeRL: Mastering Code Generation through Pretrained Models and Deep Reinforcement Learning

- Training reward models based on the signals like compile error, run time error, or whether pass the test.

Both works use the intermediate signals (for reasoning, whether a middle step is correct; for coding, whether the code compiles) and final signals (for reasoning, whether the final answer is correct; for coding, whether the code passes the test) as the reward. Note that this type of RL is different than RLHF because it does not need human feedback.

2.4 - The intriguing alignment between reasoning and coding

In our previous post, we discussed a hypothesis that training on code is likely to improve reasoning abilities because:

ㅤ | Reasoning | Coding |

Data format | Chain-of-thought | Line-by-line comments |

Easy and middle-level task | Step-by-step reasoning | Procedure-oriented programming |

Hard task | Task decomposition | Object-oriented programming |

- Comments on code are naturally existing chain-of-thought data

- Procedure-oriented programming is similar to solving a task step-by-step. This is applicable to tasks of easy and middle complexity

- Object-oriented programming is similar to decomposing a task into smaller tasks then solve them separately. This is applicable to tasks of higher complexity.

This remarkable alignment was initially discussed in Madaan et. al. 2022 in the context of commonsense reasoning, and approximately at the same time (but slightly later) deepened by discussions internally within CMU, AI2, and Google.

Generally, we see that improving reasoning is very similar to improving coding. Here we deepen this hypothesis by highlighting the similarity of the recipes for training large language models for reasoning or coding:

ㅤ | Reasoning | Coding |

Continue training | continue training on scientific literature

data format = text + latex

example: Minerva / Galactica | continue training on code

data format = text + programming language

example: Codex |

Supervised finetuning | SFT using chain-of-thought instructions

data format = chain-of-thought

example: CoT specialization | SFT using instructions about coding

data format = lines of code

example: AlphaCode |

Reinforcement learning | Use process and outcome based reward

reward format = if correct reasoning results

example: process and outcome based reward | Use compile and pass rate as reward

reward format = if correct execution results

example: CodeRL |

Sampling and decoding | Self-consistency: sample multiple solutions then majority vote | Sampling and filtering: sample multiple solutions then filter and cluster the solutions |

We see both reasoning and coding have gone through:

- A continue training stage where one does data scaling on the base model, either with code or scientific literature.

- A supervised finetuning stage where one fine-tunes the model on either instructions asking for finishing complex tasks or writing codes

- A reinforcement learning stage where one uses intermediate reasoning steps/ compile rate and final reasoning results/ pass rate as reward

- During decoding, both reasoning and coding sample multiple solutions then choose the best from the decoding space.

These similarities make the connections between code and reasoning deeply intriguing.

3 - Prompt engineering for complex tasks

Having discussed how to build models of strong reasoning abilities. In this section, we discuss how to prompt the models effectively to fully release the models’ potential.

3.1 - Basic chain-of-thought prompting

The following papers are recommended for beginner

- Wei et. al. 2022. Chain-of-Thought Prompting Elicits Reasoning in Large Language Models.

- This paper is the first paper discovering when prompted with chain-of-thought in-context demonstrations, there exists a phase change showing large models are substantially better than smaller models, which further leads to the discovery of emergent abilities.

- Wang et. al. 2022. Self-Consistency Improves Chain of Thought Reasoning in Language Models

- Majority voting over the sampled CoT reasoning paths significantly improves reasoning performance

- Suzgun et. al. 2022. Challenging BIG-Bench Tasks and Whether Chain-of-Thought Can Solve Them

- Using CoT to tackle challenging big-bench tasks. A meaningful side product of this paper is the BigBench Hard dataset which is very effective in testing models’ reasoning abilities.

3.2 - Advanced techniques and analytics

The following papers discuss advanced CoT prompting practices:

- Fu et. al. 2023. Complexity-Based Prompting for Multi-Step Reasoning

- Use complex chains over simple chains as in-context demonstrations

- Zhou et. al. 2023. Least-to-Most Prompting Enables Complex Reasoning in Large Language Models

- Break down a complex problem into a series of simpler subproblems and then solve them in sequence

- Khot et. al. 2023. Decomposed Prompting: A Modular Approach for Solving Complex Tasks

- Decompose complex tasks into simpler tasks and solve them one-by-one

- Zheng et. al. 2023. Progressive-Hint Prompting Improves Reasoning in Large Language Models

- SOTA performance on MATH dataset (probably the most challenging reasoning dataset) using complex prompt + progressive hint

- Yao et. al. 2023. Tree of Thoughts: Deliberate Problem Solving with Large Language Models

- Exploration over coherent units of text, considering multiple reasoning paths, and facilitating deliberate decision making and strategic lookahead.

Generally, for complex tasks, first decompose them into simpler ones, then solve simpler ones step-by-step.

The following papers discuss prompting the language model in the format/ style of code

- Madaan et. al. 2022. Language Models of Code are Few-Shot Commonsense Learners

- Gao et. al. 2023. PAL: Program-aided Language Models

- Zhang et. al. 2023. Exploring the Curious Case of Code Prompts

This technique is probably the weirdest prompt engineering technique: format a natural language task into pseudo code, then use it to prompt a language model whose training data involves code, then the model’s performance on natural language reasoning can improve

The following papers discuss how to finetune the model to have better in-context learning:

- Min et. al. 2023. MetaICL: Learning to Learn In Context

- Wei et. al. 2023. Symbol tuning improves in-context learning in language models

And the Gradient has an awesome in-context learning literature review:

The following papers discuss how and why in-context learning works:

- Xie et. al. 2021. An Explanation of In-context Learning as Implicit Bayesian Inference

- the LM infers a latent concept between examples in a prompt and enters the corresponding task mode

- Wei et. al. 2023. Larger language models do in-context learning differently

- large models can override semantic priors when presented with in-context exemplars that contradict priors, despite the stronger semantic priors that larger models may hold.

The short take on in-context learning is that the examples in the prompt make the model enter the corresponding task mode and then perform the task.

The following papers discuss the behavior of prompting and chain-of-thought:

- Min et. al. 2022. Rethinking the Role of Demonstrations: What Makes In-Context Learning Work?

- When some labels are wrong, the model can still make correct prediction. This indicates that the model is more influenced by the [form] of the prompt, not the [meaning] of the prompt.

- Wang et. al. 2022. Towards Understanding Chain-of-Thought Prompting: An Empirical Study of What Matters

- Incorrect reasoning in the prompt can still make the model reason correctly, but the relevance of the prompt and order of reasoning steps are more important — which again, indicates that the model is more influenced by the [form] of the prompt, not the [meaning] of the prompt.

- Madaan and Yazdanbakhsh. 2022. Text and Patterns: For Effective Chain of Thought, It Takes Two to Tango.

- Detailed analysis showing the format of the prompt improves CoT reasoning (while the correctness of the content may not play a strong role)

- Zhang et. al. 2023. How Language Model Hallucinations Can Snowball

- ChatGPT and GPT-4 often over-commit to early mistakes, leading to a phenomenon termed as "hallucination snowballing," where they make subsequent false claims, even though they can identify a significant proportion of these mistakes as incorrect.

The short take is the model only looks at the format of the prompt but may not be significantly influenced by the correctness of the prompts. Yet to what extent the model can be influenced by the correctness of the prompt, or how much the prompt can overwrite the model’s prior belief, is a question yet to investigate.

The following papers discuss how to improve model performance by refinement and feedback:

- Madaan. et. al. 2023. Self-refine: Iterative refinement with self-feedback

- The model can refine and improve its own reasoning in multiple scenarios including code optimization, math reasoning, dialog response generation and so on.

- Madaan et. al. 2023. Learning Performance-Improving Code Edits

- Training on trajectory of programs improves coding.

The short take is that refinement and feedback in the form of natural language (v.s. in the form of a scalar as is in RL) is very effective for further improving language models (either by in-context learning or fine-tuning).

4 - Evaluating language models’ reasoning abilities

Having discussed the recipe for training strong models and techniques for prompting, now we discuss the evaluation of language models’ reasoning.

4.1 - Evaluation basics

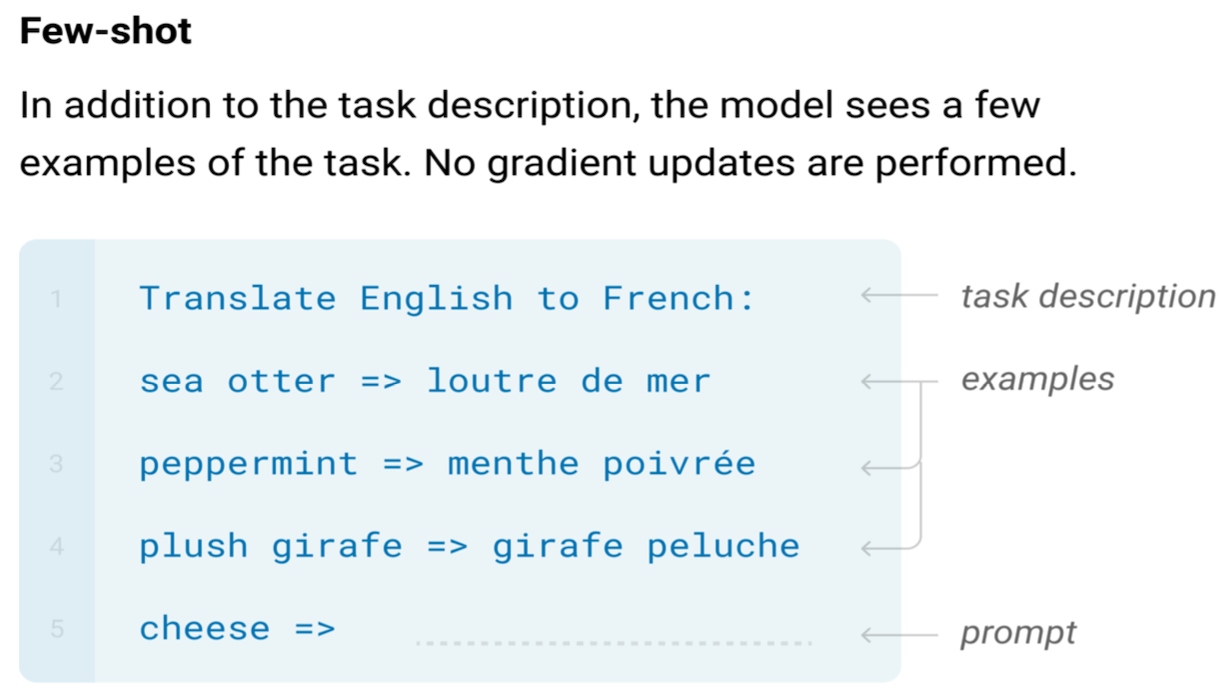

When talking about evaluation, there are three important factors to consider: data formats, type of abilities, and type of model. First, four data formats when prompting:

where:

- In-context means one prepend the test questions with a list of in-context demonstrations.

- Zero-shot means directly feed the test question to the model without in-context demonstrations.

- Chain-of-thought means generating reasoning before the answer.

- Answer-only means without chain-of-thought.

There are typically two types of abilities that are approximately orthogonal to each other:

- Knowledge: whether the model knows things of the world

- Reasoning: whether the model can perform reasoning upon its knowledge.

These two things are not strictly orthogonal to each other because some rules for reasoning can also be viewed as some form of knowledge. Yet these two abilities have clear differences when evaluating:

- Some datasets focus more on the evaluation of knowledge, such as MMLU which tests if the model has the knowledge up to college-level.

- Some datasets focus more on the evaluation of reasoning, such as BBH, which tests if the model has the abilities to solve problems step by step.

- For knowledge, chain-of-thought performs similarly to answer-only (see FlanPaLM paper)

- For reasoning, chain-of-thought performs significantly better than answer-only (see original CoT paper and then FlanPaLM paper)

In practice, because CoT performs on par with (in knowledge) or better than (in reasoning) answer-only, plus CoT is more user-friendly (because it tells the user thinking process) modern chatbots always deployed with CoT (whatever you ask ChatGPT it tells you a lot of reasoning).

Finally, for evaluation, we differentiate two types of models: pretrained checkpoints and instruction-tuned checkpoints.

- Pretrained checkpoints have the ability to do in-context learning. Most of the pretrained models can do in-context answer-only, some better models can do in-context chain-of-thought (yet it is still unclear why some pretrained models can do CoT while others cannot). Yet pretrained checkpoints may not be also to do zero-shot because they are not trained to do so (but some pretrained checkpoints can still do zero-shot CoT, see the “let’s think step by step” paper).

- Instruction-tuned checkpoints have both the ability to do zero-shot and in-context prompting. A bit of caveat here is that if not tuned properly, the in-context learning performance may drop a bit after instruction tuning.

After all these discussion, we recommend using in-context chain-of-thought for evaluation

- In-context learning is a better method for evaluating pretrained checkpoints because it better reveals the model potential. Zero-shot may underestimate model performance especially for models that does not support a magic spell (”let’s think step by step”) for zero-shot chain-of-thought.

- Chain-of-thought prompting is a better method for evaluating reasoning ability because it fully releases the model’s reasoning performance than answer-only prompting

4.2 - Introducing Chain-of-thought hub

chain-of-thought-hub

FranxYao • Updated Nov 14, 2025

Having discussed all the evaluation basics, we introduce chain-of-thought hub, an on-going effort as the unified platform for evaluating language models’ reasoning abilities. We compile a list of complex reasoning tasks including math (GSM8K), science (MATH), symbolic (BBH), knowledge (MMLU), to measure which models are really better. Below is a quick glance of the leaderboard. Many of the numbers are still yet to be filled, but the current form still gives a sense of models’ capability ranking.

Model | # Params | GSM8K | MATH | MMLU | BBH |

gpt-4 | ? | 92.0 | 42.5 | 86.4 | - |

claude-v1.3 | ? | 81.8 | - | 74.8 | - |

gpt-3.5-turbo | ? | 78.9 | - | 67.3 | 70.1 |

claude-instant | ? | 74.8 | - | - | - |

text-davinci-003 | ? | - | - | 64.6 | 70.7 |

code-davinci-002 | ? | 66.6 | 19.1 | 64.5 | 73.7 |

Minerva | 540B | 58.8 | 33.6 | - | - |

Flan-PaLM | 540B | - | - | 70.9 | 66.3 |

Flan-U-PaLM | 540B | - | - | 69.8 | 64.9 |

PaLM | 540B | 56.9 | 8.8 | 62.9 | 62.0 |

text-davinci-002 | ? | 55.4 | - | 60.0 | 67.2 |

PaLM | 64B | 52.4 | 4.4 | 49.0 | 42.3 |

LLaMA | 65B | 50.9 | 10.6 | 63.4 | - |

LLaMA | 33B | 35.6 | 7.1 | 57.8 | - |

LLaMA | 13B | 17.8 | 3.9 | 46.9 | - |

Flan-T5 | 11B | 16.1 | - | 48.6 | 41.4 |

LLaMA | 7B | 11.0 | 2.9 | 35.1 | - |

Generally:

- We rank the model performance by GSM8K, the classical benchmark measuring chain-of-thought math reasoning performance. This is definitely not the only metric, but a good interpretation is "how good the model can do math while maintaining other generic abilities" -- which is also very hard.

- GPT-4 clearly outperforms all other models on GSM8K and MMLU while Claude is the only model family that is comparable to GPT family.

- The 65B LLaMA is very close to text/code-davinci-002, which means that based on it, if SFT and RLHF are done correctly, it is very likely that we could reproduce ChatGPT based on the 65B LLaMA

- Smaller models, like FlanT5 11B and LLaMA 7B, clearly lag behind the leaderboard, meaning that complex reasoning may only be the privilege of large models.

We further note that in the github repo, we include:

- Detailed experimental setup and result analysis

- Scripts for reproducing all results of GPT and Claude

We encourage the readers to check it out :)

5 - Conclusion

In this post, we discuss the reasoning abilities of large language models. Complex reasoning is important not only because it is the number 1 differentiator of stronger v.s. weaker models, but also it serves as the foundation for the model to become next-generation computation platform/ operating system, such that it can foster a new ecosystem upon it.

We discuss the recipe for building models of strong reasoning abilities: pretraining, supervised fine-tuning, and reinforcement learning. We find it intriguing that the recipe for improving reasoning is closely related to the recipe for improving coding, which deepens our previous hypothesis of the close relationship between reasoning and coding. We further discusses advanced prompting engineering techniques and analytics of the model behavior when performing complex reasoning. Finally, we discuss how to evaluate the models’ reasoning abilities, and introduce Chain-of-thought Hub, an on-going effort towards unified evaluation of language models’ reasoning performance.

We hope this post serves as the roadmap towards building open-sourced models with strong reasoning abilities.

Millions of hours in the history of the world must always be wasted, before a truly historic hour, a decisive hour of mankind, comes into being. —— Decisive Moments in History by Stefan Zweig

Appendix: More resources in large language model reasoning

- Lil’Log 2023. Prompt Engineering

- Anthropic Claude Prompt Troubleshooting

- Microsoft Semantic Kernel

- Prompt Engineering Guide

- Huang and Chang 2022. Towards Reasoning in Large Language Models: A Survey

- Utterance